Original Author: Mohamed Baioumy & Alex Cheema

Translated by: BeWater

Due to the length of the full report, we have split it into two parts. This is the first part, which mainly discusses the core framework of AI x Crypto, specific examples, and opportunities for builders. If you want to view the full translation, please click this link.

1. Introduction

Artificial Intelligence (AI) will cause unprecedented social transformation.

With the rapid development of AI and the new possibilities it creates in various industries, it will inevitably lead to widespread economic disruption. The crypto industry is no exception. We observed three major DeFi attacks in the first week of 2024, with $76 billion at risk in DeFi protocols. With AI, we can examine the security vulnerabilities of smart contracts and integrate AI-based security layers into blockchain.

The limitation of AI lies in the fact that bad actors can abuse powerful models, as evidenced by the malicious spread of deepfakes. Fortunately, various advances in cryptography will introduce new capabilities to AI models, greatly enriching the AI industry while addressing some serious flaws.

The convergence of AI and the crypto field will give birth to numerous projects worth attention. Some of these projects will provide solutions to the aforementioned issues, while others will combine AI and Crypto in superficial ways that do not bring real benefits.

In this report, we will introduce the conceptual framework, specific examples, and insights to help you understand the past, present, and future of this field.

2. AI x Crypto's core framework

In this section, we will introduce some practical tools to help you analyze AI x Crypto projects in more detail

2.1 What is an AI (Artificial Intelligence) x Crypto (Cryptocurrency) project?

Let's review some examples of projects that use both crypto and AI and discuss whether they truly belong to AI x Crypto projects.

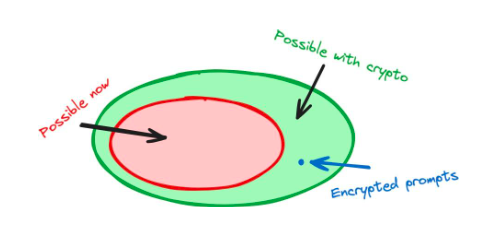

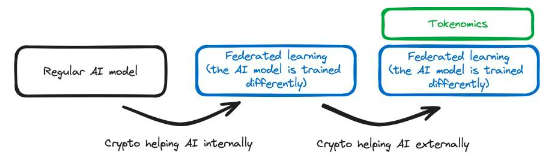

This case demonstrates how cryptography can help and enhance an AI product - using cryptographic methods to change the training approach of AI. This results in a product that cannot be achieved solely with AI technology: a model that can accept encrypted instructions.

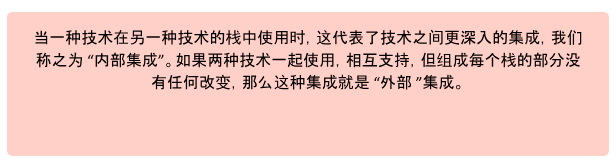

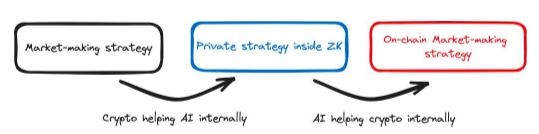

Figure 1 : Making internal modifications to the AI stack using cryptographic techniques can generate new functionalities. For example, FHE allows us to use encrypted instructions

In this case, AI technology is used to improve encryption products - which is the exact opposite of what we discussed earlier. Dorsa provides an AI model that makes the process of creating secure smart contracts faster and cheaper. Although it is off-chain, the use of AI models still contributes to encryption projects: smart contracts are typically at the core of encryption project solutions.

Dorsa's AI capabilities can uncover vulnerabilities overlooked by humans, thus preventing future hacking attacks. However, this particular example does not leverage AI to give encryption products capabilities that were previously unattainable - the AI only makes this process better and faster. Nevertheless, this is an example of AI technology (model) improving encryption products (smart contracts).

LoverGPT is not an example of Crypto x AI. We have already established that AI can help improve the cryptographic tech stack, and vice versa, which can be illustrated through the examples of Privasea and Dorsa. However, in the case of LoverGPT, the encryption part and the AI part do not interact with each other, they simply coexist in the product. To consider a project as an AI x Crypto project, it is not enough to only have AI and Crypto contribute to the same product or solution - these technologies must intertwine and collaborate to generate a solution.

Encryption technology and AI can be directly combined to generate better solutions. Combining them can make them work better together in the overall project. Only projects involving collaborative work between these technologies are classified as AI X Crypto projects.

2.2 How AI and Crypto Promote Each Other

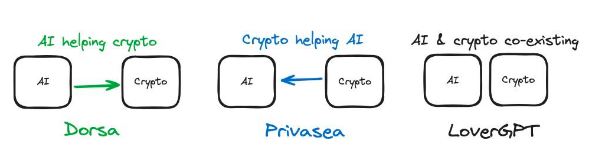

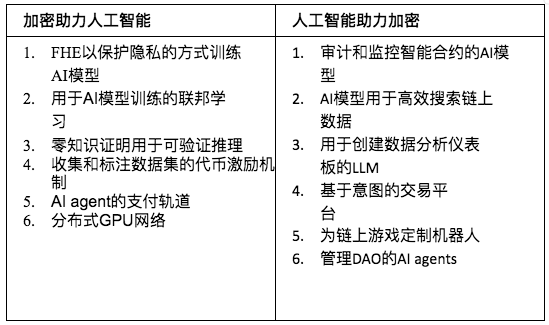

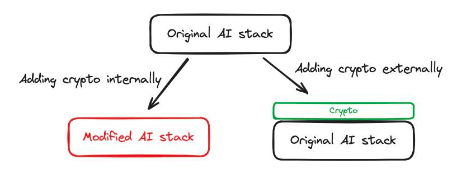

Figure 2: The combination of AI and crypto in three different products

Let's review the previous case studies. In Privasea, FHE (i.e., encryption technology) is used to generate AI models that can accept encrypted input. Therefore, we are using a Crypto (encryption) solution to improve the training process of AI, so Crypto is helping AI. In Dorsa, AI models are used to review the security of smart contracts. AI solutions are used to enhance crypto products, so AI is helping Crypto. When evaluating the intersection of AI X Crypto projects, this brings us an important dimension: is Crypto being used to assist AI or is AI being used to assist crypto?

This simple question can help us identify key aspects of the current use case, which is the critical problem to be addressed. In the case of Dorsa, the desired outcome is a secure smart contract. This can be achieved by skilled developers, and Dorsa leverages AI to improve the efficiency of this process. However, fundamentally, we only care about the security of the smart contract. Once the key problem is identified, we can determine if AI is helping Crypto or if Crypto is helping AI. In some cases, there may not be a meaningful interaction between the two (e.g., LoverGPT).

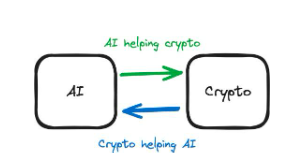

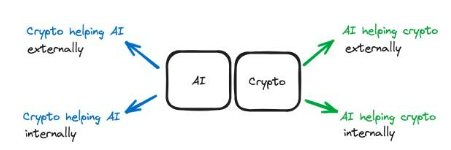

The table below provides several examples in each category.

Table 1: How Crypto and AI are Combined

You can find more than 150 AI x Crypto project directories in the appendix. If we missed anything or if you have any feedback, please contact us!

2.2.1 Summary

Both AI and Crypto have the ability to support each other's technology to achieve their goals. The key in evaluating projects is to understand whether the core of the project is an AI product or a Crypto product.

Figure 3: Distinction

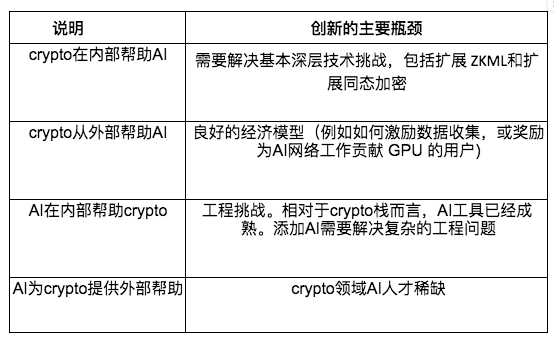

2.3 Internal and External Support

Let's take an example of how Crypto can help AI. When the specific set of technologies that make up AI changes, the capabilities of the AI solution as a whole also change. This set of technologies is called a stack. The AI stack includes the mathematical ideas and algorithms that make up various aspects of AI. The specific techniques used to handle training data, train models, and perform model inference are all part of the stack.

In the stack, there is a deep connection between each part - the specific combination of technologies determines the function of the stack. Therefore, changing the stack is equivalent to changing the goals that the entire technology can achieve. Introducing new technology into the stack can create new technological possibilities - Ethereum has added new technology to its cryptographic stack, making smart contracts possible. Similarly, changes to the stack can also allow developers to bypass problems that were previously considered inherent to the technology - Polygon's changes to the Ethereum cryptographic stack have allowed them to reduce transaction fees to levels previously thought to be impossible.

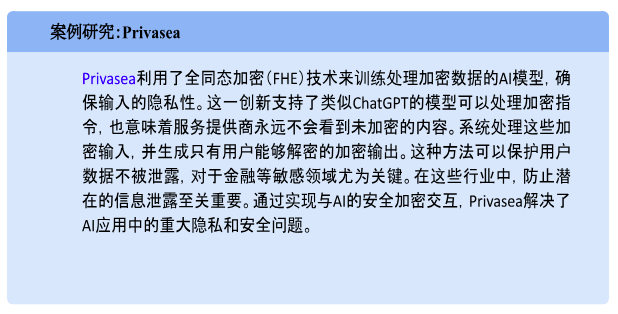

Internal Support: Cryptographic technology can be used to make internal modifications to the AI stack, such as changing the technical means of training models. We can introduce FHE technology in the artificial intelligence stack, and Privasea is an example that directly integrates an encrypted part in the AI stack, creating a modified AI stack.

External Support: Cryptography is used to support AI-based functionality without modifying the AI stack. Bittensor is an example that incentivizes users to contribute data - which can be used to train AI models. In this case, there are no changes to the training or usage of the models, and the AI stack remains unchanged. However, in the Bittensor network, the use of economic incentives helps the AI stack better achieve its goals.

Figure 4: Explanation discussed above

Similarly, AI can provide internal or external support for Crypto:

Internal support: AI technology is used within the crypto stack. AI is on-chain and directly connected to parts of the crypto stack. For example, on-chain AI agents manage a DAO. This AI not only assists the crypto stack; it is an integral part of the tech stack, deeply embedded in it to ensure the smooth functioning of the DAO.

External support: AI provides external support for the crypto stack. AI is used to support the crypto stack without making internal changes to it. Platforms like Dorsa use AI models to ensure the security of smart contracts. AI, being off-chain, functions as an external tool to make the process of writing secure smart contracts faster and cheaper.

Figure 5: This is the upgraded model, showing the differences between internal and external support.

The first step in analyzing any AI x Crypto project is to determine which category it belongs to.

2.4 Determine Bottlenecks

Compared to external support, internal support characterized by deep integration of technology often presents more technical difficulties. For example, if we want to modify the AI stack by introducing FHE or Zero-Knowledge Proofs (ZKPs), we need technical personnel with considerable expertise in both cryptography and AI. But few people belong to this interdisciplinary field. These companies include Modulus, EZKL, Zama, and Privasea.

Therefore, these companies require a substantial amount of funding and scarce talent to advance their solutions. Enabling users to integrate artificial intelligence in smart contracts also requires in-depth knowledge; companies like Ritual and Ora must address complex engineering issues.

On the other hand, external support also has bottlenecks, but they usually involve lower levels of technological complexity. For example, adding cryptocurrency payment functionality to AI agents does not require extensive modifications to the models. It can be relatively easy to implement. While building a ChatGPT plugin that allows ChatGPT to pull data from DeFi LLama webpages may pose engineering challenges for AI engineers.

Get statistical data is not technically complex, but few AI engineers are members of the crypto community. Although this task is not technically complex, there are very few AI engineers who can use these tools, and many people are unaware of these possibilities.

2.5 Measurement utility

There will be good projects in all four categories.

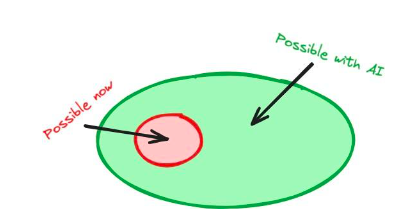

If artificial intelligence is integrated into the cryptographic technology stack, smart contract developers will be able to access on-chain AI models, increasing the number of possibilities and potentially bringing about a wide range of innovations. The same applies when integrating cryptography into the AI stack - deep technological fusion will lead to new possibilities.

Figure 6: Incorporating artificial intelligence into the encryption stack for developers to access new functionalities.

When artificial intelligence provides external assistance to encryption, the integration of AI is likely to improve existing products while generating fewer breakthroughs and introducing fewer possibilities. For example, using AI models to write smart contracts may be faster, cheaper, and more secure than before, but it is unlikely to produce smart contracts that were previously impossible. The same applies to the assistance of encryption technology to AI - token incentives can be used for the AI stack, but it is unlikely to redefine how we train AI models.

In summary, integrating one technology into another technology stack may create new functionalities, while using technologies outside of the stack may enhance usability and efficiency.

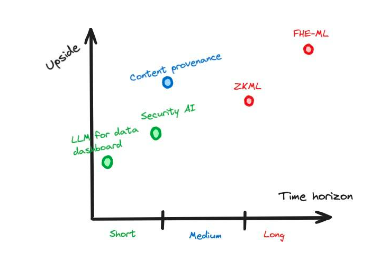

2.6 Project Evaluation

We can estimate partial benefits of a specific project based on the quadrant it falls into, as internal support between technologies can yield greater returns. However, estimating the total risk-adjusted returns of a project requires considering more factors and risks.

One factor to consider is whether the project under consideration is useful in the context of Web2, Web3, or both. AI models with FHE functionality can be used to replace AI models without FHE functionality - the introduction of FHE is beneficial in both domains, where privacy is valuable in any case. However, integrating AI models into smart contracts can only be used in the Web3 environment.

As mentioned earlier, the integration of AI and encryption can occur internally or externally to the project, which will also determine the potential for project growth. Projects involving internal support tend to generate new capabilities and greater efficiency improvements, making them more valuable.

We must also consider the time span for the maturity of this technology, as this will determine how long people need to wait for returns.

Investment in the project. To do this, the current progress can be analyzed and bottleneck issues related to the project can be identified (see Section 2.4).

Figure 7: A hypothetical example illustrating potential upside compared to time span

2.7 Understanding Complex Products

Some projects involve combinations of the four categories we described, rather than just one category. In such cases, the risks and rewards associated with the project often multiply, and the time span for project implementation is also longer.

In addition, you must also consider whether the overall project is superior to the sum of its parts—a project that has everything often falls short of meeting the end user's needs. An emphasized approach often produces excellent products.

2.7.1 Example 1: Flock.io

Flock.io allows the "splitting" of training models among multiple servers, so that no party can access all the training data. By being able to directly participate in the training of the model, you can contribute your own data to the model without leaking any data. This is beneficial for protecting user privacy. As the artificial intelligence stack (model training) changes, this involves encryption to help artificial intelligence internally.

Additionally, they also use encrypted tokens to reward individuals participating in the model's training and use smart contracts to economically punish individuals who disrupt the training process. This does not change the process involved in training the model, and the underlying technology remains unchanged, but all parties need to adhere to the on-chain confiscation mechanism. This is an example of how encryption technology helps artificial intelligence externally.

Most importantly, encryption technology introduces a new capability internally to help artificial intelligence: models can be trained through a decentralized network while maintaining data privacy. However, cryptocurrency that helps artificial intelligence externally does not introduce new capabilities because tokens are only used to incentivize users to contribute to the network. Users can be compensated with fiat currency, and using cryptocurrency as an incentive is a better solution that can improve system efficiency, but it does not introduce new capabilities.

Figure 8: Flock.io Illustration and changes in the stack, where changes in color indicate alterations made internally

2.7.2 Example 2: Rockefeller Robot

Rockefeller Robot is a trading bot that operates on the blockchain. It uses artificial intelligence to determine which transactions to make. However, since the AI model itself doesn't run on the smart contract, we rely on service providers to run the model for us and communicate its decisions to the smart contract, proving that they are not lying to the contract. If the smart contract doesn't verify whether the service provider is lying, the provider may conduct harmful transactions on our behalf. Rockefeller Robot allows us to use ZK proofs to show the smart contract that the service provider is not lying. Here, ZK is used to modify the AI stack. The AI stack needs to embrace ZK technology, or else we wouldn't be able to use ZK to prove the model's decisions to the smart contract.

By utilizing ZK technology, the resulting outputs of the AI model have verifiability and can be queried from the blockchain, which means that the AI model operates within the encrypted stack. In this scenario, we incorporate the AI model in the smart contract to fairly determine transactions and prices. This would not be feasible without artificial intelligence.

Figure 9: Rockefeller robot and stack change diagram. The color change indicates a change in the stack (internal support).

3. Worth Investigating Questions

3.1 Encryption Field and Deepfake Insights

On January 23rd, an AI-generated voice message falsely claiming to be President Biden urged Democrats not to vote in the 2024 primaries. Less than a week later, a financial worker lost $25 million due to a deepfake video call impersonating their colleague. Meanwhile, on X (formerly Twitter), AI-generated explicit photos of Taylor Swift garnered 45 million views and sparked widespread outrage. These events, which took place in the first two months of 2024, are just a glimpse of the disruptive impact deepfakes have had in the realms of politics, finance, and social media.

3.1.1 How did they become a problem?

The creation of forged images is not a new phenomenon. In 1917, The Strand magazine published intricately cut-out photographs designed to resemble fairies, which many believed to be compelling evidence of supernatural beings.

Figure 10 : One of the photos of the "Cottingley Fairies". The creator of Sherlock Holmes, Sir Arthur Conan Doyle, presented these faked photos as evidence of supernatural phenomena.

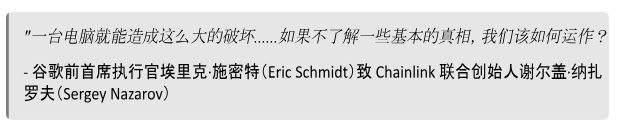

As time has passed, forgery has become increasingly easy and inexpensive, greatly enhancing the speed of spreading false information. For example, during the 2004 United States presidential election, a manipulated photo falsely showed Democratic nominee John Kerry participating in a protest with Jane Fonda, a controversial American activist. The Cottingley Fairies required careful staging, with cut-out illustrations from children's books made out of cardboard, while this forgery was a simple task done with Photoshop.

Figure 11 : This photo suggests John Kerry and Jane Fonda appearing together at an anti-Vietnam War rally. It was later discovered to be a faked image, created by combining two existing photographs using Photoshop.

However, the risks posed by fake photos have decreased as we have learned how to identify editing traces. In the case of the "Tourist Guy", amateur enthusiasts were able to recognize manipulated images by observing inconsistencies in the white balance of different objects within the scene. This is a result of increased public awareness of false information; people have learned to pay attention to the traces of photo editing. The word "Photoshopped" has become a common term: signs of image manipulation are widely recognized, and photo evidence is no longer seen as unalterable proof.

3.1.1.1 Deepfakes make forgery easier, cheaper, and more realistic

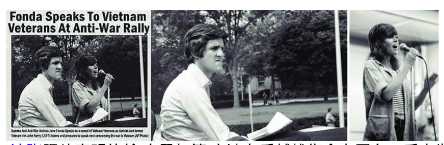

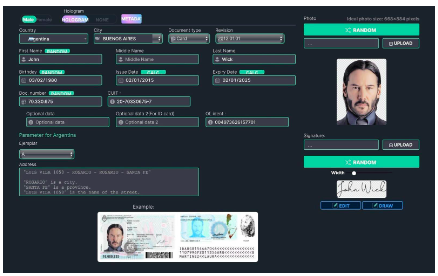

In the past, forged documents were easily detected by the naked eye, but deepfake technology has made it simple and inexpensive to create images that are almost indistinguishable from real photos. For example, the OnlyFake website uses deepfake technology to generate realistic fake ID photos in just minutes, for only $15. These photos are used to bypass the Know Your Customer (KYC) fraud protection measures of OKX, a cryptocurrency exchange. In the case of OKX, these deepfake IDs fooled their trained employees, highlighting that even professionals can no longer rely on visual identification to detect fraud based on deepfakes.

Due to the deepfaking of images, people have become more reliant on video evidence. However, deepfakes will soon severely undermine the credibility of video evidence. A researcher from the University of Texas at Dallas successfully bypassed identity verification implemented by a KYC provider using a free deepfake face-swapping tool, as described in this article. This represents a significant advancement, as creating high-quality videos used to be expensive and time-consuming.

In 2019, it took someone two weeks and $552 to create a 38-second deepfake video of Mark Zuckerberg, which also had noticeable visual imperfections, as shown in this article. Today, we can create realistic deepfake videos for free in just minutes.

Figure 12: OnlyFake panel for creating fake IDs in minutes

3.1.1.2 Why is video so important?

Before deepfake technology emerged, videos were considered reliable evidence. Unlike easily falsifiable images, videos have historically been difficult to manipulate, making them a recognized form of reliable evidence in court. This has made deepfake videos particularly dangerous.

At the same time, the emergence of deepfakes can also lead to the denial of genuine videos, as seen in the case of a video of US President Biden that was wrongly labeled as a deepfake. Critics based their claims on Biden's lack of eye blinking and differences in lighting, which have been proven to be inaccurate. This raises the question: deepfakes not only make fake videos look real, but also make real videos look fake, further blurring the boundaries between reality and fiction and increasing the difficulty of accountability.

Deepfakes enable large-scale targeted advertising. We may soon see another version of YouTube, where the content, people, and locations are personalized for each viewer. An early example is Zomato's localized ads, where actor Hrithik Roshan orders food from popular restaurants in the viewer's city. Zomato generates different deepfake ads tailored to each viewer's GPS location, introducing restaurants in their area.

3.1.2 What are the limitations of current solutions?

3.1.2.1 Consciousness

The current deepfake technology is already very advanced, enough to deceive trained experts. This allows hackers to bypass identity verification (KYC/AML) processes, even manual reviews. This indicates that we cannot distinguish deepfakes from real images with our eyes alone. We cannot simply be skeptical of images to prevent the spread of deepfakes: we need more tools to combat the proliferation of deepfakes.

3.1.2.2 Platforms

Without strong social pressure, social media platforms are not willing to effectively restrict deepfakes. For example, Meta bans deepfake videos with manipulated audio, but refuses to ban purely fabricated video content. They have disregarded the recommendations of their oversight board and have not removed a deepfake video that falsely depicts President Biden touching his granddaughter, as reported in this Reuters article.

3.1.2.3 Policies

We need to enact laws that effectively address the risks posed by new forms of deepfakes, while not limiting legitimate uses with minimal harm, such as in the fields of art or education, where the intention is not to deceive people. Incidents like the unauthorized dissemination of deepfake images of Taylor Swift have prompted legislators to enact stricter laws to combat such deepfake activities. In response to such cases, it may be necessary to strengthen online review processes legally, but the proposal to ban all AI-generated content has raised concerns among filmmakers and digital artists who fear it would unjustly restrict their work. Finding the right balance is crucial, or else legitimate creative applications will be stifled.

Advocating for lawmakers to raise the barriers to entry for training powerful models can ensure that large tech companies do not monopolize artificial intelligence. This could result in irreversibly concentrating power in the hands of a few companies – for example, the Executive Order 14110 on the safe, secure, and trustworthy development and use of artificial intelligence suggests strict requirements for companies with significant computational capabilities.

Figure 13: Vice President Kamala Harris applauds as President Joe Biden signs the first executive order on artificial intelligence in the United States

3.1.2.4 Technology

The first line of defense against abuse is to directly establish barriers in artificial intelligence models, but these barriers are constantly being broken. It is difficult to scrutinize AI models because we do not know how to use existing low-level tools to modify higher-dimensional behaviors. Furthermore, companies training AI models can use the implementation of barriers as an excuse to introduce censorship and bias into their models. This is problematic because large tech AI companies are not accountable to public opinion - they can freely manipulate their models to the detriment of users.

Even if the power to create powerful AI is not concentrated in dishonest companies, it may still be impossible to establish an AI that is both protected and unbiased. Researchers find it difficult to determine what constitutes abuse, making it challenging to handle user requests in a neutral and balanced manner while preventing abuse. If we cannot define abuse, it seems necessary to reduce the strictness of prevention measures, which could lead to a recurrence of abuse. Therefore, completely prohibiting the abuse of AI models is impossible.

One solution is to detect malicious deepfakes immediately after they appear rather than preventing their creation. However, deepfake detection AI models (such as those deployed by OpenAI) are becoming inaccurate and outdated. Although deepfake detection methods have become increasingly complex, the technology to create deepfakes is also becoming more complex at a faster pace - deepfake detectors are falling behind in the technological arms race. This makes it difficult for the media to identify deepfake news with certainty. AI is already advanced enough to create deepfakes that are indistinguishable from real footage.

Watermarking technology can discreetly mark deepfakes so that we can identify them wherever they appear. However, deepfakes do not always come with watermarks as they must be intentionally added. Watermarks are an effective method for companies (such as OpenAI) voluntarily marking their manipulated images. Nevertheless, watermarks can still be To remove or forge watermarks easily and bypass any watermark-based deepfake detection solutions. Watermarks might also be accidentally removed and most social media platforms automatically delete watermarks.

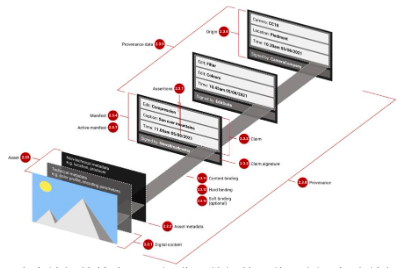

The most popular deepfake watermark technology is C2PA (Content Authenticity Initiative) which aims to prevent misinformation by tracking the source of media and storing this information in media metadata. C2PA is supported by companies like Microsoft, Google, and Adobe, making it likely to be widely adopted across the content supply chain and more popular than other similar technologies.

Unfortunately, C2PA also has its own weaknesses. Since C2PA stores the complete editing history of an image and verifies each edit using encryption keys controlled by editing software that complies with C2PA standards, we must trust these editing software. However, people are likely to accept edited images with valid C2PA metadata directly without considering whether they trust every party in the editing chain. Therefore, if any editing software is compromised or capable of malicious editing, it is possible to make others believe that the forged or maliciously edited image is authentic.

Figure 14: Example of an image containing a series of edits conforming to the C2PA standard metadata. Each edit is signed by a different trusted party, but only the final edited image is made public. Source: Real Photo vs. AI Generated Art: A New Standard (C2PA) Uses PKI to Show an Image's History

In addition, the encrypted signatures and metadata included in the C2PA watermark can be linked to specific users or devices. In some cases, C2PA metadata can connect all the images taken by your camera: if we know that an image comes from someone's camera, we can identify all the other images from that camera. This can help whistleblowers anonymize their photos when they are published.

All potential solutions will face a range of unique challenges. Despite the wide variation of these challenges - including limitations of social awareness, flaws in large tech companies, difficulties in implementing regulatory policies, and our own technological limitations.

3.1.3 Can encryption tackle this problem?

Open-source deepfakes models have already started circulating. Therefore, some might argue that there will always be ways to exploit deepfakes for abusive purposes; even if such practices are deemed illegal, some individuals may still opt to generate unethical deepfake content. However, we can address this issue by allowing malicious deepfake content to diverge from the mainstream. We can prevent people from perceiving deepfake images as real and create platforms that limit deepfake content. This section will introduce various solutions based on encryption technology to address the issue of misleading propagation caused by malicious deepfakes, while emphasizing the limitations of each approach.

3.1.3.1 Hardware authentication

A camera that undergoes hardware authentication embeds a unique proof with each photo it captures, validating that the photo was taken by that particular camera. This proof is generated by an irreplicable and tamper-proof chip unique to the camera, ensuring the authenticity of the image. Similar procedures can be applied to audio and video as well.

The authentication certificate tells us that the image was taken by a real camera, which means that we can usually trust that it is a photograph of a real object. We can flag images without this kind of proof. But if the camera captures a forged scene that looks like a real one, this method fails - you can simply aim the camera at a forged image. Currently, we can determine if a photo is taken from a digital screen by checking if the captured image is distorted, but scammers will find ways to hide these flaws (e.g., by using better screens or limiting lens glare). Ultimately, even AI tools cannot recognize this deception because scammers can find ways to avoid all these distortions.

Hardware authentication will reduce the occurrence of forged images, but in some cases, we still need additional tools to prevent deepfake images from spreading when cameras are hacked or abused. As we discussed earlier, even with hardware-verified cameras, it is still possible for deepfakes to create the false impression that the content is a real image, for reasons such as camera hacking or using a camera to capture deepfaked scenes on computer screens. To address this issue, other tools such as camera blacklists are needed.

Camera blacklists would allow social media platforms and applications to flag images from specific cameras that have been known to generate misleading images in the past. Blacklists can trace the camera's information, such as the camera ID, without publicly disclosing it.

However, it is currently unclear who will maintain the camera blacklist, and it's also unclear how to prevent people from adding the reporting person's camera to the blacklist after accepting bribes (as an act of retaliation).

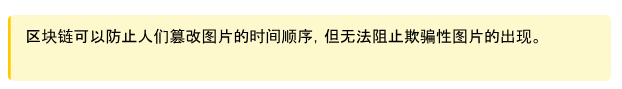

3.1.3.2 Blockchain-based Image Chronology

The blockchain is tamper-proof, so when an image appears on the internet, the image is added to a chronology with a timestamp along with additional metadata, ensuring that the timestamp and metadata cannot be altered. By storing the unedited original image on the blockchain in an immutable manner before it can be maliciously edited and disseminated, accessing such records will allow us to identify malicious edits and verify the original source. This technology has been implemented on the Polygon blockchain network as part of the fact-checking tool Verify developed in collaboration with Fox News.

Figure 15: User interface of the blockchain-based tool Verify by Fox. Artwork can be searched through URL. It retrieves and displays source, transaction hash, signature, timestamp, and other metadata from the Polygon blockchain.

3.1.3.3 Digital Identity

If deepfakes undermine our trust in unverified images and videos, then trustworthy sources may become the only way to avoid false information. We have relied on trusted media sources to verify information because they adhere to journalistic standards, fact-checking procedures, and editorial supervision to ensure accuracy and credibility.

However, we need a way to verify the content we see online comes from sources we trust. This is where the usefulness of digitally signed data comes in: it can mathematically prove the authorship of certain content.

Signatures are generated using digital keys, which are created and generated by wallets and only known by those who possess the associated encrypted wallet. This way, we can know who the author of the data is by simply checking if the signature corresponds to the personal encrypted key belonging to that individual.

We can leverage cryptocurrency wallets to seamlessly and user-friendly attach signatures to our posts. By logging into social media platforms with a cryptocurrency wallet, we can utilize the wallet's functionality to create and verify signatures on social media. Thus, if the source of a certain post is untrustworthy, the platform will be able to alert us - it will use automated signature verification to mark false information.

Furthermore, the zk-KYC infrastructure connected to the wallet can bind unknown wallets to identifications verified through the KYC process without compromising user privacy and anonymity. However, as deepfakes become increasingly sophisticated, the KYC process may be bypassed, allowing malicious actors to create false anonymous identities. This issue can be addressed through solutions like Worldcoin's Proof of Personhood (PoP).

Proof of Personhood is a mechanism used by WorldCoin to verify if a wallet belongs to a real person and only allows one wallet per person. To do this, it utilizes a biometric (iris) imaging device called Orb to verify wallets. Since biometric data cannot be forged, requiring social media accounts to be linked to a unique WorldCoin wallet is a viable method that can prevent bad actors from creating multiple anonymous identities to conceal their unethical online behavior - at least until hackers find ways to deceive biometric devices, it can address the depthfake KYC problem.

3.1.3.4 Economic Incentives

Authors can be penalized for misinformation, and users can be rewarded for identifying incorrect information. For example, "Veracity Bonds" allow media organizations to bet on the accuracy of their publications and face economic penalties for spreading false information. This provides these media companies with an economic incentive to ensure the truthfulness of information.

Veracity Bonds would be an integral part of the "Truth Market," where different systems compete for users' trust by efficiently and reliably verifying the authenticity of content. This is similar to the proof market concept, such as Succinct Network and =nil Proof Market, but focuses on the more challenging problem of authenticity verification.

Cryptography alone is not enough. Smart contracts can be used as a means to enforce the economic incentives necessary to make these "Truth Markets" function, thereby allowing blockchain technology to play a crucial role in combating misinformation.

3.1.3.5 Reputation Scores

We can use reputation to represent trustworthiness. For example, we can look at the number of followers a person has on Twitter to judge whether we should believe what they say. However, reputation systems should consider the past records of each author, not just their popularity. We do not want to confuse credibility with popularity.

We cannot allow individuals to generate unlimited anonymous identities, as they could simply abandon their identity when their reputation is tarnished in order to reset their social credibility. This requires the use of non-duplicable digital identities, as mentioned in the previous section.

We can also utilize evidence from the "Truth Market" and "Hardware Authentication" to determine a person's reputation, as these are reliable methods for tracking their true records. The reputation system is a culmination of all the other solutions discussed so far, making it the most powerful and comprehensive approach.

Figure 16: Musk hinted at the establishment of a website in 2018 to rate the credibility of journal papers, editors, and publications.

3.1.4 Is the encryption solution scalable?

The blockchain solution mentioned above requires a fast and high storage blockchain - otherwise, we cannot include all images in the verifiable time records on the chain. As the amount of online data published every day grows exponentially, this becomes increasingly important. However, there are algorithms that can compress data in a verifiable manner.^1

In addition, signatures generated by hardware authentication are not applicable to edited versions of images: zk-SNARKs must be used to generate editing proofs.<^2> ZK Microphone is an implementation of editing proofs for audio.^4

3.1.5 Deepfakes are not inherently bad

It must be acknowledged that not all deepfakes are harmful. This technology also has innocent uses, such as this video of Taylor Swift teaching math generated by artificial intelligence.^5 Due to the low cost and accessibility of deepfakes, it creates a personalized experience. For example, HeyGen allows users to send videos with an AI-generated face resembling their own.^6

's personal information. Deep simulation also narrows the language gap through voice-over translation to bridge the language gap.

3.1.5.1 Methods of Controlling Deepfakes and Monetizing Them

Artificial intelligence "simulated humans" services based on deepfake technology charge high fees and lack accountability and supervision. The top internet celebrity Amouranth on OnlyFans has released her own digital persona, and fans can interact with her privately. These startup companies can limit or even shut down access, such as the artificial intelligence companion service called Soulmate.

By hosting artificial intelligence models on the blockchain, we can transparently provide funds and control to the models through smart contracts. This will ensure that users never lose access to the models and help distribute profits between contributors and investors. However, there are also technical challenges. The most popular technology for implementing on-chain models, zkML (used by Giza, Modulus Labs, and EZKL), would make the models run 1000 times slower. Nevertheless, research in this subfield continues, and technology is constantly improving. For example, HyperOracle is experimenting with opML, and Aizel is developing solutions based on multi-party computation (MPC) and trusted execution environments (TEE).

3.1.6 Chapter Summary

Complex deepfakes are eroding trust in the political, financial, and social media domains, highlighting the need for a "verifiable network" to uphold truth and democratic integrity.

Deepfakes used to be an expensive and technically intensive task, but with the advancements in artificial intelligence, it has become easy to create, altering the landscape of misinformation.

4 If you are interested in this issue, please contact Arbion.

History teaches us that manipulating the media is not a new challenge, but artificial intelligence has made it easier and cheaper to produce convincing fake news, necessitating new solutions.

Deepfake videos pose a unique danger as they undermine previously reliable evidence, leaving society in a dilemma where genuine actions may be seen as fake.

Existing countermeasures involve awareness, platform, policy, and technological approaches, each facing challenges in effectively combating deepfakes.

Hardware proof and blockchain establish the source of each image and create transparent, immutable editing records, offering promising solutions.

Cryptocurrency wallets and zk-KYC enhance the verification and authentication of online content, while on-chain reputation systems and economic incentives (such as "truth bonds") provide a market for truth.

While acknowledging the positive applications of deepfakes, cryptographic techniques also propose a method of whitelisting beneficial deepfakes, striking a balance between innovation and integrity.

3.2 Bitter Lesson

This sentence goes against common sense, but it is a fact. The AI community refuses to accept the claim that customized approaches are ineffective, but the "bitter lesson" still applies: using the most powerful computational capabilities always produces the best results.

We must scale up: more GPUs, more data centers, more training data.

Computer chess researchers once attempted to build chess engines based on the experiences of top human players, and that's an example of researchers getting it wrong. The initial chess programs simply copied human opening strategies (using "opening books"). The researchers hoped that the chess engine could start from a strong position without having to calculate the best moves from scratch. They also included many "tactical heuristics" - tactics used by human players, such as forks. In simple terms, chess programs were built based on human insights into how to play successfully, rather than general computational methods.

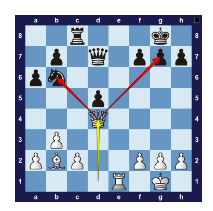

Figure 18: Fork - Queen attacking two pieces

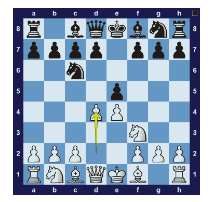

Figure 19: Example of chess opening sequence

In 1997, IBM's DeepBlue, with its massive computational power and search-based techniques, defeated the world chess champion. Although DeepBlue was superior to all "human-designed" chess engines, chess researchers shunned it. They believed DeepBlue's success was merely a flash in the pan because it did not employ chess strategies - a crude solution in their view. They were wrong: in the long run, applying extensive computation to general problem-solving methods often yields better results than custom approaches. This computation-heavy approach

has birthed successful engines in Go (AlphaGo), improved speech recognition, and more robust computer vision technology.

The latest achievement in computation-heavy AI methods is OpenAI's ChatGPT. Unlike previous attempts, OpenAI did not attempt to encode human understanding of language principles into software. Instead, their model combines vast amounts of data from the internet with massive computation. Unlike other researchers, they did not intervene or embed any biases into the software. In the long run, the best-performing methods are always based on leveraging computation. This is a historical fact; in fact, we may have enough evidence to prove that this is always true.

In the long run, combining massive computational power with vast amounts of data is the best approach, thanks to Moore's Law: over time, the cost of computation will exponentially decrease. In the short term, we might not be certain about significant increases in computational bandwidth, which may lead researchers to try improving their techniques by manually embedding human knowledge and algorithms into the software. This approach might work for a while, but in the long run, it will not be successful: embedding human knowledge into underlying software makes it more complex, and models cannot improve based on additional computational power. This renders the artificial approach short-sighted, so Sutton advises us to ignore artificial techniques and focus on applying more computational power to general computing methods.

"The Bitter Lesson" has a tremendous influence on how we should build decentralized artificial intelligence:

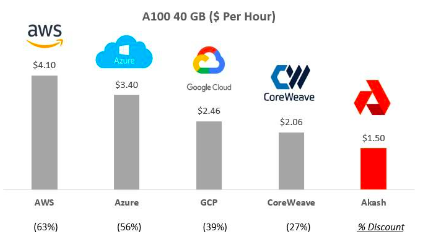

Building Large-scale Networks: The above lessons highlight the development of large-scale artificial intelligence models and the aggregation of significant computational resources for them.The importance of training. These are key steps to enter the new field of artificial intelligence. Akash, GPUNet, and IoNet aim to provide scalable infrastructure.

Figure 20: Comparison of Akash prices with other vendors such as Amazon AWS

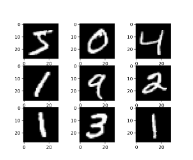

Hardware Innovation: ZKML methods have been criticized for being 1000 times slower than non-ZKML methods. This aligns with the criticism faced by neural networks. In the 1990s, neural networks showed great promise. Yann LeCun's CNN model, a small neural network, was able to classify handwritten digit images (see image below) with success. By 1998, more than 10% of banks in the United States were using this technology to read checks. However, these CNN models were not scalable, leading to a sharp decline in interest, and computer vision researchers began utilizing human knowledge to create better systems. In 2012, researchers developed a new CNN utilizing GPUs (popular hardware typically used for generating computer graphics in games, CGI, etc.), which led to incredible performance surpassing all other available methods at the time. This network was called AlexNet and sparked the revolution of deep learning.

Figure 22: Neural networks in the 90s could only handle low-resolution digit images.

Figure 23: AlexNet (2012) is able to process complex images and outperforms all alternative methods

The advancement of artificial intelligence technology is inevitable, as the cost of computation continues to decrease. Custom hardware for technologies like ZK and FHE will accelerate progress - companies like Ingonyama and the academic community are paving the way. In the long run, we will achieve large-scale ZKML through the application of greater computational power and improved efficiency. The only question is, how will we utilize these technologies?

Figure 24: An example of hardware advancement in ZK Prover. (Source)

Expanded Data: As the scale and complexity of artificial intelligence models grow, it is necessary to expand the datasets accordingly. Generally, the size of the dataset should grow exponentially with the model size to prevent overfitting and ensure stable performance. For a model with billions of parameters, this often means planning datasets that include billions of tokens or samples. For example, Google's BERT model was trained on the entire English Wikipedia, which contains over 2.5 billion words, and the BooksCorpus, which contains around 800 million words. Meta's LLama, on the other hand, was trained on a dataset of 1.4 trillion words. These numbers emphasize the scale of the datasets we need - as models evolve towards trillions of parameters, the datasets must further expand. This expansion helps ensure that the models can capture subtle differences and diversity in human language, making the development of large, high-quality datasets just as important as architectural innovations in the models themselves. Companies such as Giza, Bittensor, Bagel, and FractionAI are meeting these unique demands in the field (see Chapter 5 for challenges in the data domain, such as model decay, adversarial attacks, and quality assurance).

Developing General Approaches: In the decentralized artificial intelligence field, specific application-oriented approaches like ZKPs and FHE are adopted for immediate efficiency gains. Customizing solutions tailored to specific architectures can enhance performance but may sacrifice long-term flexibility and scalability, limiting broader system evolution. In contrast, focusing on general approaches provides a foundation that, while initially less efficient, is scalable and adaptable to various applications and future development. With trends like Moore's Law driving computational growth and cost reductions, these approaches are bound to shine. Choosing between short-term efficiency and long-term adaptability is crucial. Emphasizing general approaches can prepare the decentralized AI for the future, making it a robust and flexible system that leverages advancements in computational technology to ensure lasting success and relevance.

3.2.1 Conclusion

In the early stages of product development, it may be crucial to choose a method that is not limited by scale. This is important for both companies and researchers to assess use cases and ideas. However, painful lessons have taught us that in the long run, we should always prioritize choosing a general and scalable approach.

Here is an example where a manual method has been replaced by automated, general differentiation: before using autodiff libraries like TensorFlow and PyTorch, gradients were often computed manually or through numerical differentiation - a method that is inefficient, error-prone, and problematic, wasting researchers' time. Autodiff, on the other hand, has become an indispensable tool as it speeds up experimentation and simplifies model development. Thus, the general solution wins - but before autodiff became a mature and available solution, the old manual methods were a necessary condition for conducting ML research.

In conclusion, Rich Sutton's "Bitter Lesson" tells us that if we maximize the computational powers of artificial intelligence instead of trying to make AI emulate human-known methods, progress in AI will be faster. We must expand existing computing capabilities, scale data, innovate hardware, and develop general methods - adopting this approach will have profound implications for the decentralized AI field. Although the "Bitter Lesson" may not apply to the early stages of research, it may always be correct in the long run.

3.3 AI Agents Will Disrupt Google and Amazon

3.3.1 Google's Monopoly Issue

Online content creators typically rely on Google to publish their content. In return, if they allow Google to index and display their work, they can gain a constant stream of attention and advertising revenue. However, this relationship is imbalanced; Google holds a monopoly position (over 80% of search engine traffic) that content creators themselves cannot reach. Therefore, content creators' income heavily depends on Google and other tech giants. One decision by Google could potentially spell the end of individual businesses.

Google's introduction of Featured Snippets function- Displaying the answer to the user's query without having to click into the original website - highlights this problem, as now information can be obtained without leaving the search engine. This disrupts the rules upon which content creators rely for survival. As a condition for being indexed by Google, content creators hope that their websites can receive recommended traffic and attention. Instead, the Featured Snippets feature allows Google to summarize content while excluding creators from the traffic. The fragmentation of content producers renders them practically powerless to collectively oppose Google's decision; without a unified voice, individual websites lack bargaining power.

Figure 25: Example of Featured Snippets Functionality

Google has further experimented by providing a list of sources for user query answers. The following example includes sources like The New York Times, Wikipedia, MLB.com, and more. Due to Google directly providing the answers, these websites won't get as much traffic.

Figure 26: Example of "From the Web" Functionality

3.3.2 Monopoly Issue with OpenAI

The "Featured Snippets" functionality introduced by Google represents a concern