Blockchain scalability has long been a hotly debated topic. Almost every blockchain network advertises high transactions per second (TPS) as a selling point. However, TPS is not a valid metric for comparing blockchain networks, which makes it difficult to assess their relative performance. Moreover, large TPS numbers often come at a cost. That raises the question: are these networks actually scaling, or are they just increasing their throughput?

Not all transactions consume the same amount of gas

First, we need to establish why the simple and convenient TPS metric is not an accurate measure of scalability.

To compensate nodes for executing transactions (and to discourage users from cluttering the network with unnecessary computation), blockchains charge a fee proportional to the computational burden. In Ethereum, computational complexity is measured in gas. Because gas is such a convenient measure of transaction complexity, this article also uses the term for non-Ethereum blockchains.

Transactions vary widely in complexity, and so does the gas they consume. Bitcoin, the pioneer of trustless peer-to-peer transactions, supports only the basic Bitcoin Script language, so its simple address-to-address transfers use very little gas. In contrast, smart contract chains such as Ethereum and Solana run virtual machines that support Turing-complete programming languages, enabling far more complex transactions. As a result, interacting with dApps such as Uniswap requires much more gas.

That is why comparing the TPS of different blockchains is meaningless. What we should compare instead is computational capacity, that is, throughput.
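As a rough illustration, the Python sketch below compares two hypothetical workloads by gas per second rather than by TPS. The 21,000 gas figure is the fixed cost of a plain ETH transfer. The 150,000 gas average for a DEX swap and both TPS values are illustrative assumptions, not measurements of any real network.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    tps: int             # transactions per second
    avg_gas_per_tx: int  # average gas consumed per transaction

    def gas_per_second(self) -> int:
        # Computational throughput: TPS weighted by how much gas each transaction uses.
        return self.tps * self.avg_gas_per_tx


# Hypothetical workloads; every number except 21,000 gas per transfer is assumed.
transfers = Workload("simple transfers", tps=1_000, avg_gas_per_tx=21_000)
swaps = Workload("DEX swaps", tps=200, avg_gas_per_tx=150_000)

for w in (transfers, swaps):
    print(f"{w.name}: {w.tps:,} TPS, {w.gas_per_second():,} gas/s")
```

The swap workload has one fifth of the TPS yet performs more computation per second. A raw TPS comparison hides exactly this gap.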

What limits scalability?

Blockchains strive to be as decentralized, open and inclusive as possible. To achieve this, two fundamental properties must be kept in check:

● Hardware requirements

The decentralization of a blockchain network is determined by the ability of the weakest node in the network to validate the blockchain and maintain its state. Therefore, the cost (hardware, bandwidth, and storage) of running a node should be kept as low as possible to enable as many individuals as possible to be permissionless participants in a trustless network.

● State growth

Adverse effects of increased throughput

● Node requirements

The minimum requirements to run a node, and the number of nodes in each network:

Bitcoin¹: 350 GB of disk space, 5 Mbit/s connection, 1 GB RAM, CPU > 1 GHz. Number of nodes: ~10,000

Ethereum²: 500+ GB of SSD disk space, 25 Mbit/s connection, 4-8 GB RAM, 2-4 CPU cores. Number of nodes: ~6,000

Solana³: 1.5+ TB of SSD disk space, 300 Mbit/s connection, 128 GB RAM, 12+ CPU cores. Number of nodes: ~1,200

Note that the higher the CPU, bandwidth and storage requirements that a blockchain's throughput imposes on nodes, the fewer nodes the network will have. This weakens decentralization and makes the network less inclusive.

● Full node sync time

When a node is run for the first time, it must sync with the network, downloading and validating the network's state from the genesis block to the tip of the chain. This process should be as fast and efficient as possible so that anyone can be a permissionless participant in the protocol.

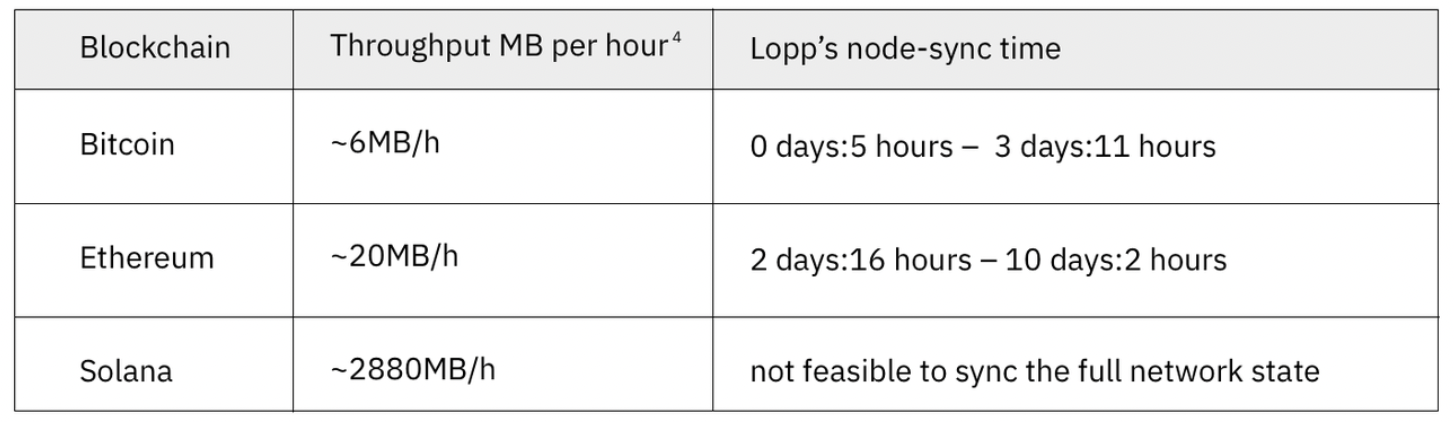

Table 1. Blockchain throughput and node synchronization comparison

Table 1 shows that an increase in throughput leads to a longer synchronization time as more and more data needs to be processed and stored.
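The relationship in Table 1 can be approximated with a simple linear model. The history a new node must process grows with the chain's data rate, and sync time grows with the history. The Python sketch below is a back-of-the-envelope calculation. The data rates, the 3-year history and a node that processes 50 GB per hour are all hypothetical values. The model also ignores pruning, snapshots and other sync shortcuts.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def history_size_gb(bytes_per_second: float, years: float) -> float:
    # Data accumulated by a chain that produces a constant number of bytes per second.
    return bytes_per_second * years * SECONDS_PER_YEAR / 1e9


def sync_time_hours(history_gb: float, node_gb_per_hour: float) -> float:
    # Time for a new node to download and validate the full history at a fixed rate.
    return history_gb / node_gb_per_hour


NODE_GB_PER_HOUR = 50.0  # hypothetical processing rate of a new node
YEARS = 3.0              # hypothetical chain age

for label, rate in [("low-throughput chain", 1_000), ("high-throughput chain", 100_000)]:
    size = history_size_gb(rate, YEARS)
    hours = sync_time_hours(size, NODE_GB_PER_HOUR)
    print(f"{label}: {size:,.1f} GB of history, ~{hours:,.1f} h to sync")
```

Under this model, a chain with 100 times the data rate takes 100 times as long to sync on the same hardware.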

How should scalability be defined?

Scalability is the most misused term in the blockchain space. While increasing throughput is desirable, it is only one piece of the puzzle.

Scalability means that more transactions can be executed on the same hardware.

For this reason, scalability can be divided into two categories:

● Sequencer scalability

Sequencing refers to how transactions are ordered and processed in the network. As mentioned earlier, any blockchain can increase its throughput by enlarging blocks and shortening block times, up to the point where doing so seriously harms the network's decentralization. Tweaking these simple parameters, however, does not deliver the improvement that is needed. Ethereum's EVM can theoretically handle up to about 2,000 TPS, which is not enough to meet long-term demand for block space. To scale sequencing, Solana has made some impressive innovations: its parallel execution environment and clever consensus mechanism greatly improve throughput efficiency. But these improvements are still not enough to meet the demand for throughput, nor do they amount to sequencer scalability. As Solana increases its throughput, the hardware cost of running a node and processing transactions rises with it.
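The Python sketch below shows why tweaking block parameters pushes costs onto nodes. It assumes every block is full and uses illustrative, roughly Ethereum-like parameters: a 30 million gas limit, 12-second blocks and 21,000 gas per simple transfer. These values are assumptions, and only the proportionality matters.

```python
def gas_per_second(block_gas_limit: int, block_time_s: float) -> float:
    # Gas every full node must execute per second if all blocks are full.
    return block_gas_limit / block_time_s


def max_tps(block_gas_limit: int, block_time_s: float, gas_per_tx: int) -> float:
    # Upper bound on TPS for a workload where every transaction uses gas_per_tx.
    return gas_per_second(block_gas_limit, block_time_s) / gas_per_tx


baseline = dict(block_gas_limit=30_000_000, block_time_s=12.0)
# Double the block size and halve the block time.
scaled = dict(block_gas_limit=60_000_000, block_time_s=6.0)

for label, params in [("baseline", baseline), ("scaled", scaled)]:
    print(
        f"{label}: {max_tps(gas_per_tx=21_000, **params):,.0f} TPS, "
        f"{gas_per_second(**params):,.0f} gas/s per node"
    )
```

Throughput rises fourfold, but so does the amount of gas every node must execute per second. Nothing in this approach makes verification cheaper.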

● Verifier scalability

What are Validity Rollups?

Validity Rollups (also known as "ZK-Rollups") execute transactions off-chain and submit a validity proof for each batch, which is then verified on Ethereum.

How do Validity Rollups scale without raising hardware requirements?

Even if provers do require high-end hardware, this does not affect the blockchain's decentralization, because the validity of transactions is guaranteed by mathematically verifiable proofs.

What matters is the cost of verifying the proofs. The data involved is highly compressed, and the heavy computation is abstracted away by the proof, so the impact on the underlying blockchain's nodes is minimal.

Validators (Ethereum nodes) do not require high-end hardware, and the batch size does not increase hardware requirements. Only state transitions and a small amount of calldata need to be processed and stored by the nodes. This allows all Ethereum nodes to use their existing hardware for validation.

The more transactions, the lower the price.

In a traditional blockchain, the more transactions there are, the more expensive it is for everyone as the block space gets filled up. Users need to outbid each other in the fee market for their transactions to be included in blocks.
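The Python sketch below models this dynamic as a simplified first-price auction. Block producers include the highest fee-per-gas bids until the block is full. Ethereum's actual EIP-1559 base-fee mechanism is not modeled, and the block size and bids are hypothetical.

```python
from typing import List, Tuple

Bid = Tuple[int, int]  # (fee offered per unit of gas, gas used by the transaction)


def fill_block(bids: List[Bid], block_gas_limit: int) -> Tuple[List[Bid], int]:
    """Include the best-paying bids first; return them and the lowest fee that got in."""
    included: List[Bid] = []
    used = 0
    for fee, gas in sorted(bids, key=lambda b: b[0], reverse=True):
        if used + gas <= block_gas_limit:
            included.append((fee, gas))
            used += gas
    lowest_fee = min((fee for fee, _ in included), default=0)
    return included, lowest_fee


BLOCK_GAS_LIMIT = 100_000  # hypothetical: room for four 21,000-gas transfers

low_demand = [(fee, 21_000) for fee in (1, 2, 3)]
high_demand = [(fee, 21_000) for fee in range(1, 9)]

for label, bids in [("low demand", low_demand), ("high demand", high_demand)]:
    included, lowest = fill_block(bids, BLOCK_GAS_LIMIT)
    print(f"{label}: {len(included)} of {len(bids)} included, lowest winning fee {lowest}")
```

Once demand exceeds the available block space, only bids of 5 or more get in. Users who want inclusion have to pay more.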

Validity Rollups reverse this situation. Verifying a batch of transactions on Ethereum has a cost, and as the number of transactions in a batch increases, that cost grows only logarithmically. Adding more transactions to a batch therefore makes each transaction cheaper, even though the batch's verification cost rises, because that cost is amortized across all transactions in the batch. For this reason, Validity Rollups aim to fit as many transactions as possible into each batch. As the batch size grows toward infinity, the amortized fee per transaction converges to zero: the more transactions a Validity Rollup includes, the cheaper it becomes for each user.
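The amortization argument can be sketched in Python. Following the paragraph above, the model assumes the on-chain cost of verifying a batch of n transactions grows logarithmically, as fixed + per_log * log2(n). The two coefficients are hypothetical gas figures. Per-transaction calldata costs are left out, as they are in the text.

```python
import math


def batch_verification_gas(n: int, fixed: float = 500_000, per_log: float = 50_000) -> float:
    # Hypothetical cost model: verifying a proof for n transactions costs a fixed
    # amount plus a term that grows with log2(n).
    return fixed + per_log * math.log2(n)


def amortized_gas_per_tx(n: int) -> float:
    # Verification cost shared equally by every transaction in the batch.
    return batch_verification_gas(n) / n


for n in (1, 100, 10_000, 1_000_000):
    print(f"batch of {n:>9,}: {batch_verification_gas(n):>12,.0f} gas total, "
          f"{amortized_gas_per_tx(n):>12,.2f} gas per tx")
```

The total verification cost keeps rising, but each transaction's share falls toward zero as n grows. In this sense, more transactions make a Validity Rollup cheaper per user.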

dYdX, a dApp powered by a Validity Rollup, regularly sees batches of more than 12,000 transactions. Comparing the gas consumed by the same trade on mainnet and on the Validity Rollup illustrates the scalability gain:

Settling a dYdX trade on Ethereum mainnet: 200,000 gas

Settling a dYdX trade on StarkEx: <500 gas
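These figures support a simple back-of-the-envelope comparison. The Python snippet below treats 500 gas as an upper bound for a StarkEx trade, so the resulting ratio is a lower bound. It then applies both per-trade figures to a 12,000-trade batch, the size dYdX regularly exceeds.

```python
MAINNET_GAS_PER_TRADE = 200_000  # settling one dYdX trade on Ethereum mainnet
STARKEX_GAS_PER_TRADE = 500      # upper bound: a StarkEx trade uses less than this
BATCH_SIZE = 12_000              # trades in one batch

improvement = MAINNET_GAS_PER_TRADE // STARKEX_GAS_PER_TRADE  # lower bound on the gain
mainnet_total = MAINNET_GAS_PER_TRADE * BATCH_SIZE
starkex_upper = STARKEX_GAS_PER_TRADE * BATCH_SIZE

print(f"more than {improvement}x less gas per trade")
print(f"{BATCH_SIZE:,} trades: {mainnet_total:,} gas on mainnet "
      f"vs under {starkex_upper:,} gas on StarkEx")
```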

Why don't Optimistic Rollups (OP) scale as well as people think?

In theory, OP offers almost the same scalability benefits as Validity Rollups, with one important difference: OP optimizes for the average case, while Validity Rollups optimize for the worst case. Because blockchain systems operate under extremely adversarial conditions, optimizing for the worst case is the only way to achieve security.

The worst case for OP is that no fraud checker examines a user's transaction. In that case, to challenge fraud, the user must sync both an Ethereum full node and an L2 full node and compute the suspicious transactions themselves.

With Validity Rollups, even in the worst case, users only need to sync an Ethereum full node to verify the validity proof. They never have to re-execute the transactions themselves.

Compared to Validity Rollups, the cost of OP scales linearly with the number of transactions rather than with the number of users, which makes OP more expensive.
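The asymmetry can be sketched as a simple cost model in Python. Both functions measure worst-case work in abstract, hypothetical units. The Optimistic Rollup user re-executes every transaction, so their work is linear in the number of transactions. The Validity Rollup user verifies one proof, whose cost is assumed, as earlier in this article, to grow only logarithmically. All constants are illustrative.

```python
import math


def op_worst_case_work(num_txs: int, work_per_tx: float = 1.0) -> float:
    # No one else checks: the user re-executes every L2 transaction to find fraud.
    return num_txs * work_per_tx


def validity_worst_case_work(num_txs: int, fixed: float = 1_000.0, per_log: float = 100.0) -> float:
    # The user only verifies one proof; its cost is assumed to grow with log2(num_txs).
    return fixed + per_log * math.log2(num_txs)


for n in (1_000, 1_000_000, 1_000_000_000):
    print(f"{n:>13,} txs: OP {op_worst_case_work(n):>15,.0f} units, "
          f"Validity Rollup {validity_worst_case_work(n):>7,.0f} units")
```

With these assumed constants, growing the transaction count a thousandfold multiplies the OP user's worst-case work a thousandfold. The same growth raises the proof-verification cost by only about half.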

Permissionless access to Rollup state

In conclusion

Many approaches to blockchain scalability mistakenly focus on increasing throughput. However, this ignores the impact of throughput on nodes: the ever-increasing hardware requirements for processing blocks and storing network history, and the way these requirements inhibit the network's decentralization.

About zCloak Network

zCloak Network is a privacy computing service platform in the Polkadot ecosystem that uses a zk-STARK virtual machine to generate and verify zero-knowledge proofs for general computation. With its original autonomous-data and self-certifying computation technology, users can analyze and compute on data without sending the data externally. Through Polkadot's cross-chain messaging mechanism, it can provide data privacy protection to other parachains in the Polkadot ecosystem and to other public chains. The project will adopt a "zero-knowledge proof-as-a-service" business model to build a one-stop, multi-chain privacy computing infrastructure.